Artificial intelligence (AI) is no longer a futuristic concept. It is part of our daily lives, from virtual assistants to self-driving cars and smart devices. At the heart of this AI revolution are AI processors: specialized chips designed to handle complex AI tasks efficiently. These chips are transforming industries, powering innovations, and enabling machines to “think” faster and smarter.

In this article, we explore the 10 best AI processors that are shaping modern technology. We will discuss their features, uses, and why they stand out in the world of AI computing.

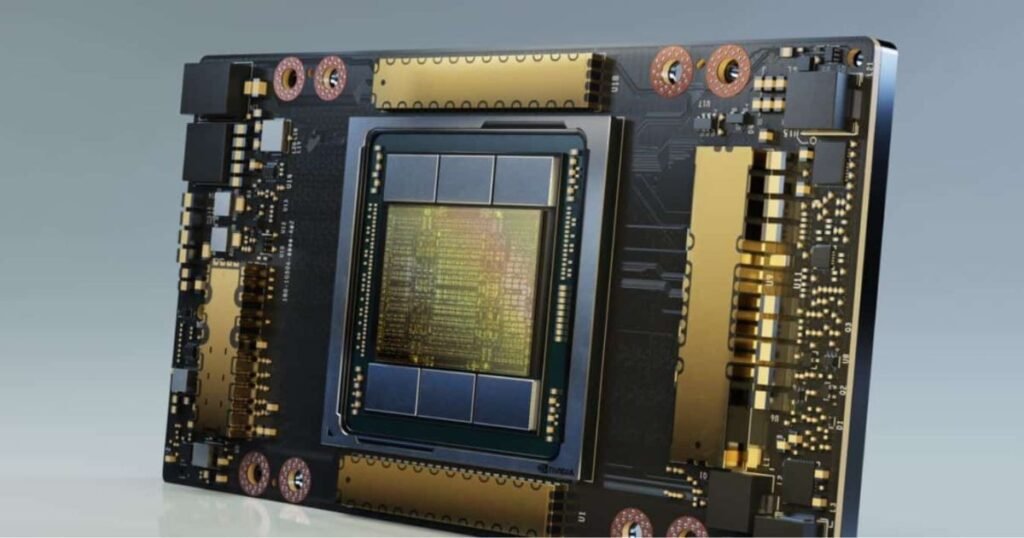

1. NVIDIA A100

The NVIDIA A100 is a flagship AI processor designed for high-performance AI computing.

Key Features:

- Built on the Ampere architecture.

- Supports Tensor Core technology for deep learning.

- Exceptional performance for large AI models.

Pros:

- Exceptional performance for AI and deep learning.

- Supports large-scale AI models efficiently.

- Optimized for AI computing in enterprise environments.

Cons:

- Very expensive.

- High power consumption for heavy workloads.

- Requires expert setup and knowledge.

Applications:

- Cloud computing and data centers.

- Machine learning training and inference.

- Scientific simulations and AI research.

The A100 is considered one of the most capable AI chips on the market for enterprise-level AI tasks, combining high speed with efficiency.

2. Google TPU v4

Google’s Tensor Processing Unit (TPU) v4 is designed specifically for AI workloads.

Key Features:

- Optimized for TensorFlow models, with JAX and PyTorch also supported through the XLA compiler.

- High throughput for AI computing.

- Energy-efficient design.

Pros:

- High throughput for AI tasks

- Energy-efficient design

- Optimized for TensorFlow models

Cons:

- Mainly limited to Google Cloud infrastructure

- Not as flexible for third-party AI frameworks

- Requires cloud access for full potential

Applications:

- AI model training in the cloud.

- Large-scale machine learning tasks.

- AI-powered analytics and predictions.

TPU v4 has revolutionized AI computing in data centers, providing faster processing and lower latency for deep learning workloads.

3. Intel Habana Gaudi2

Intel’s Habana Gaudi2 is an AI processor aimed at accelerating deep learning workloads.

Key Features:

- High memory bandwidth for AI datasets.

- Optimized for AI training and inference.

- Low power consumption.

Pros:

- High memory bandwidth for large datasets

- Low power consumption

- Efficient for enterprise AI solutions

Cons:

- Less widely supported than NVIDIA GPUs

- May require specialized software integration

- High initial cost

Applications:

- Enterprise AI solutions.

- High-speed AI model training.

- Cloud AI infrastructure.

With its performance and efficiency, Gaudi2 is becoming a strong option among AI chips for large-scale AI computing.

4. AMD MI300X

The AMD MI300X is a powerful AI processor designed for advanced AI and HPC (High-Performance Computing) workloads.

Key Features:

- Built on AMD's CDNA 3 GPU architecture (the related MI300A is the variant that combines CPU and GPU cores).

- Supports large-scale AI models.

- Efficient energy management.

Pros:

- High performance for complex AI models

- Efficient energy management

- Supports large-scale AI computing

Cons:

- Expensive for smaller businesses

- Limited availability currently

- Requires specific software support

Applications:

- AI-driven scientific research.

- Autonomous systems.

- Data analytics and AI simulations.

MI300X offers excellent AI computing capabilities, making it well suited to enterprises that need fast AI accelerators.

5. Apple M2 Ultra

Apple’s M2 Ultra chip integrates advanced AI processing into consumer devices.

Key Features:

- Neural Engine for AI tasks.

- High-performance CPU and GPU cores.

- Energy-efficient design for desktop Macs.

Pros:

- Integrated Neural Engine for AI tasks

- Energy-efficient design

- Supports AI-powered applications locally

Cons:

- Limited to Apple ecosystem

- Not suitable for large-scale AI training

- High cost for consumer devices

Applications:

- AI in creative software like video editing and graphics.

- Machine learning apps on Mac devices.

- AI-powered features in macOS.

Apple’s chip brings AI processing to personal devices, enabling smart AI features without cloud dependency.

6. Graphcore IPU-M2000

The Graphcore IPU-M2000 is built for AI computing efficiency and flexibility.

Key Features:

- Specialized for AI training and inference.

- High parallelism for complex AI models.

- Low-latency computing for AI applications.

Pros:

- High parallelism for deep learning models

- Low-latency AI computing

- Flexible architecture for research

Cons:

- Niche market; fewer developers

- Expensive for general use

- Limited software ecosystem

Applications:

- Research labs and AI startups.

- Natural language processing (NLP).

- Computer vision and robotics.

Graphcore’s intelligence chips are recognized for their innovation in handling advanced AI workloads.

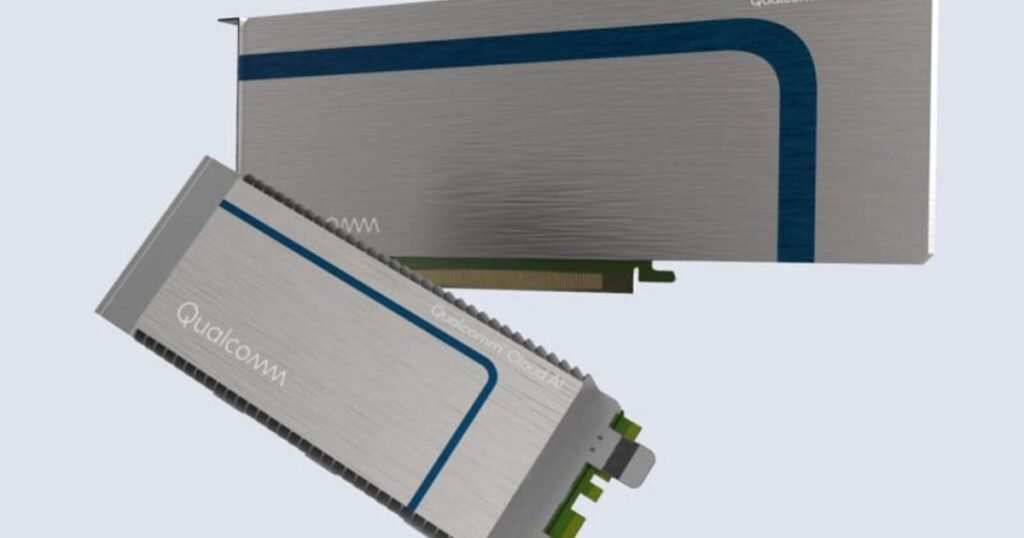

7. Qualcomm Cloud AI 100

Qualcomm’s Cloud AI 100 is designed for scalable AI deployment in data centers.

Key Features:

- Optimized for AI inference tasks.

- Supports high-speed AI computing.

- Power-efficient for large-scale operations.

Pros:

- Efficient AI inference

- Supports large-scale AI computing

- Power-efficient for continuous operation

Cons:

- Primarily cloud-focused

- Not optimized for high-end training

- Limited support for some AI frameworks

Applications:

- Edge AI applications.

- AI-powered cloud services.

- Real-time AI inference in mobile and IoT devices.

Cloud AI 100 provides efficient and fast AI processing for both cloud and edge computing.

8. Huawei Ascend 910

The Huawei Ascend 910 is a high-performance AI processor built for training massive AI models.

Key Features:

- Superior computational power for AI tasks.

- Designed for energy-efficient AI computing.

- Optimized for deep learning frameworks.

Pros:

- Excellent computational power

- Energy-efficient design

- Optimized for deep learning frameworks

Cons:

- Mainly used in Huawei ecosystem

- Expensive hardware investment

- Limited international availability

Applications:

- Large-scale AI research.

- Data center AI operations.

- Autonomous systems development.

Ascend 910 is a leading intelligence chip for enterprises focusing on AI-driven innovation.

9. Cerebras Wafer-Scale Engine 2

Cerebras created the Wafer-Scale Engine 2, one of the largest AI processors ever built.

Key Features:

- Massive core count for parallel AI computing.

- Ultra-fast processing for deep learning.

- Built for AI research and training large models.

Pros:

- Massive parallel computing power

- Handles extremely large AI models

- Ultra-fast AI processing

Cons:

- Extremely high cost

- Limited use cases for general consumers

- Requires specialized data center infrastructure

Applications:

- Advanced AI simulations.

- Large-scale deep learning research.

- AI-powered scientific discovery.

This processor is a game-changer in AI computing, capable of handling workloads that would otherwise have to be split across many smaller chips.

10. Tenstorrent Grayskull

The Tenstorrent Grayskull is an AI processor for next-generation AI applications.

Key Features:

- High-performance intelligence chip.

- Designed for AI training and inference.

- Scalable architecture for multiple AI workloads.

Pros:

- High-performance AI chip

- Scalable architecture for multiple workloads

- Efficient for both training and inference

Cons:

- Emerging technology with limited adoption

- Expensive

- Requires specialized software tools

Applications:

- Machine learning research.

- AI-driven industrial solutions.

- AI-powered software development.

Grayskull is helping push the boundaries of modern AI computing with speed and efficiency.

Benefits of AI Processors

- Faster Computation: AI processors are designed to handle parallel AI tasks efficiently.

- Energy Efficiency: Many AI chips reduce power consumption compared to traditional processors.

- Specialized AI Tasks: They excel at deep learning, machine learning, and neural network computations.

- Better AI Applications: AI processors improve virtual assistants, autonomous vehicles, and AI analytics.

- Scalable AI Computing: Data centers and cloud providers can scale AI models faster using these processors.

Challenges of AI Processors

- High Cost: Top AI processors are expensive to design and purchase.

- Complex Programming: Using AI chips requires specialized knowledge and frameworks.

- Power Consumption: High-performance chips may still consume significant energy.

- Rapid Obsolescence: AI technology evolves quickly, making chips outdated in a few years.

Despite these challenges, AI processors remain critical for advancing AI computing worldwide.
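To put the power consumption point in concrete terms, here is a minimal Python sketch that estimates the yearly electricity cost of running one accelerator. The function name `annual_energy_cost` and every input value (400 W, 70% utilization, 0.12 per kWh) are assumptions chosen for illustration, not figures for any chip in this article.

```python
def annual_energy_cost(power_watts, utilization, price_per_kwh, hours_per_year=24 * 365):
    """Estimate yearly electricity cost for one accelerator.

    power_watts: average draw under load, in watts
    utilization: fraction of the year the chip is busy (0.0 to 1.0)
    price_per_kwh: electricity price per kilowatt-hour, in your currency
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    # Convert watts to kilowatts, then multiply by the hours actually in use.
    kwh = power_watts / 1000 * hours_per_year * utilization
    return kwh * price_per_kwh


# Hypothetical inputs: a 400 W accelerator, busy 70% of the time, at 0.12 per kWh.
cost = annual_energy_cost(power_watts=400, utilization=0.7, price_per_kwh=0.12)
print(f"Estimated annual energy cost: {cost:.2f}")
```

This covers only the chip's own power draw. Cooling and the rest of the server add to the bill, so treat the result as a lower bound.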

Tips for Choosing AI Processors

- Determine your AI workload: training, inference, or edge AI.

- Compare performance metrics like FLOPS, memory, and energy efficiency.

- Consider compatibility with AI frameworks like TensorFlow, PyTorch, or ONNX.

- Evaluate scalability for cloud or enterprise deployments.

- Check for cost-effectiveness in terms of total ownership and performance.
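The tips above can be turned into a simple shortlist routine. The Python sketch below filters candidates on hard requirements (memory and framework support), then ranks the remainder by throughput per watt. The `Candidate` class, the `shortlist` function, and the chips `chip_a`, `chip_b`, and `chip_c` with their numbers are all hypothetical placeholders, so replace them with values from vendor datasheets before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    tflops: float      # peak throughput at the precision you plan to use
    memory_gb: float   # memory available to the accelerator
    watts: float       # typical power draw under load
    frameworks: tuple  # frameworks supported by the vendor's software stack


def shortlist(candidates, min_memory_gb, required_framework):
    """Keep chips that meet the hard requirements, ranked by TFLOPS per watt."""
    eligible = [
        c for c in candidates
        if c.memory_gb >= min_memory_gb and required_framework in c.frameworks
    ]
    return sorted(eligible, key=lambda c: c.tflops / c.watts, reverse=True)


# Placeholder numbers for illustration only; not real product specifications.
candidates = [
    Candidate("chip_a", tflops=300, memory_gb=80, watts=400, frameworks=("PyTorch", "TensorFlow")),
    Candidate("chip_b", tflops=250, memory_gb=96, watts=250, frameworks=("PyTorch",)),
    Candidate("chip_c", tflops=400, memory_gb=32, watts=350, frameworks=("PyTorch", "ONNX")),
]

for c in shortlist(candidates, min_memory_gb=64, required_framework="PyTorch"):
    print(f"{c.name}: {c.tflops / c.watts:.2f} TFLOPS/W")
```

Filtering before ranking reflects the order of the tips: a chip that cannot hold your model or run your framework is out, however efficient it looks on paper. Price could be added as another field if total cost of ownership matters more than efficiency.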

Conclusion

AI processors are transforming the way we use technology. From cloud computing and AI research to personal devices and smart applications, these processors make AI faster, smarter, and more efficient. NVIDIA A100, Google TPU v4, Intel Gaudi2, AMD MI300X, and other chips are leading this revolution.

With AI processors, businesses, developers, and researchers can push the boundaries of AI computing, enabling smarter software and automation for industries worldwide. Investing in the right AI processor is key to unlocking the future of technology.

FAQs

What are AI processors?

AI processors are specialized chips designed to handle artificial intelligence tasks efficiently, such as machine learning and deep learning.

How do AI chips differ from regular CPUs?

AI chips are optimized for parallel computation, enabling faster processing of AI models compared to general-purpose CPUs.
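A rough way to see why parallelism matters is to count the arithmetic in a single matrix multiplication, the core operation in most neural networks. The Python sketch below uses the conventional count of 2·m·k·n floating-point operations. The helper names and the throughput figures (1 and 100 TFLOPS) are assumptions for illustration, not measurements of any real CPU or accelerator.

```python
def matmul_flops(m, k, n):
    """FLOPs for multiplying an (m x k) matrix by a (k x n) matrix.

    Each of the m*n output elements needs k multiply-add pairs,
    conventionally counted as 2*k floating-point operations.
    """
    return 2 * m * k * n


def ideal_seconds(flops, throughput_tflops):
    """Lower-bound runtime if the chip sustained its peak throughput."""
    return flops / (throughput_tflops * 1e12)


# Hypothetical layer: a batch of 1024 inputs through a 4096 x 4096 weight matrix.
flops = matmul_flops(1024, 4096, 4096)
independent_outputs = 1024 * 4096  # each output element can be computed in parallel
print(f"{flops:,} FLOPs across {independent_outputs:,} independent outputs")

# Throughput figures below are placeholders, not measurements of any real chip.
for label, tflops in [("general-purpose CPU (assumed 1 TFLOPS)", 1.0),
                      ("AI accelerator (assumed 100 TFLOPS)", 100.0)]:
    print(f"{label}: {ideal_seconds(flops, tflops) * 1e3:.3f} ms")
```

Because every output element is independent of the others, hardware with thousands of arithmetic units can work on many of them at once. Real runtimes also depend on memory bandwidth, so these figures are best-case bounds.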

Which AI processor is best for deep learning?

It depends on the task, but NVIDIA A100, Google TPU v4, and Cerebras Wafer-Scale Engine 2 are top choices for deep learning workloads.

Are AI processors energy-efficient?

Yes, many AI processors are designed to reduce energy usage while maintaining high performance.

Can AI processors be used in personal devices?

Yes, chips like the Apple M2 Ultra bring AI processing to personal computers for local AI tasks.